DSPs can be separated into two categories:

- Fixed point precision

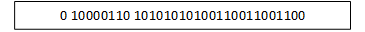

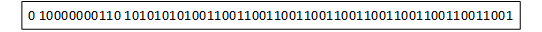

- Floating point precision: single (32-bit) or double (64-bit)

Fixed-point DSPs are designed to represent and manipulate integers (positive and negative whole numbers), typically using a minimum of 16 bits, which yields up to 2^16 possible bit patterns. In fixed point, the stored integer is implicitly scaled by a power of two, so numbers are represented with a fixed number of bits after, and sometimes before, the binary point.

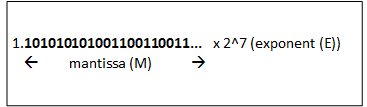
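To make the fixed-point idea concrete, here is a minimal Python sketch of a signed 16-bit format with 15 fractional bits (often called Q15), the same 16/15 split used later as the Filter Builder defaults for Input Word Length and Input Frac Length. The helper names `to_q15` and `from_q15` are hypothetical, and Python's `round` breaks exact ties towards the nearest even integer.

```python
# Minimal sketch: signed 16-bit fixed point with 15 fractional bits (Q15).
WORD_LENGTH = 16
FRAC_LENGTH = 15
SCALE = 1 << FRAC_LENGTH                 # 2**15 = 32768
INT_MIN = -(1 << (WORD_LENGTH - 1))      # -32768
INT_MAX = (1 << (WORD_LENGTH - 1)) - 1   # 32767

def to_q15(x: float) -> int:
    """Round a real value to the nearest stored Q15 integer, saturating at the limits."""
    n = round(x * SCALE)
    return max(INT_MIN, min(INT_MAX, n))

def from_q15(n: int) -> float:
    """Interpret a stored 16-bit integer as a real value (integer scaled by 2**-15)."""
    return n / SCALE

print(to_q15(0.5), from_q15(to_q15(0.5)))   # 16384 0.5
print(to_q15(1.0), from_q15(INT_MAX))       # 1.0 saturates: 32767 0.999969482421875
print(from_q15(INT_MIN))                    # -1.0
```

The 2^16 bit patterns therefore cover the real range from -1 up to just below +1 in steps of 2^-15.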

Floating-point DSPs, on the other hand, represent and manipulate rational numbers using a minimum of 32 bits, where each number is stored as a mantissa and an exponent, yielding up to 2^32 bit patterns. In floating point, the position of the binary point can ‘float’ relative to the significant digits of the number.
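A short sketch, assuming IEEE 754 single precision (the usual 32-bit floating-point format), shows how a 32-bit pattern splits into a sign bit, an 8-bit biased exponent and a 23-bit stored mantissa (fraction). The function names are hypothetical; only Python's standard `struct` module is used.

```python
import struct

def float32_fields(x: float) -> tuple[int, int, int]:
    """Split x, stored as IEEE 754 single precision, into (sign, biased exponent, fraction)."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF        # 23 stored mantissa bits (the leading 1 is implicit)
    return sign, exponent, fraction

def value_from_fields(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild a normal number: (-1)**sign * (1 + fraction / 2**23) * 2**(exponent - 127)."""
    return (-1) ** sign * (1 + fraction / 2 ** 23) * 2.0 ** (exponent - 127)

fields = float32_fields(-6.5)
print(fields)                       # (1, 129, 5242880), i.e. -1.625 * 2**2
print(value_from_fields(*fields))   # -6.5
```

The exponent field is what lets the binary point move: the same 23 mantissa bits describe -6.5 here and a value a million times smaller with a different exponent.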

Floating-point processors support a much wider dynamic range of values than fixed-point processors, with the ability to represent very small and very large numbers. They also offer much greater precision than typical (16-bit) fixed-point processing, and are ideally suited to computationally intensive applications or to cases where computational accuracy is a critical requirement.
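The dynamic-range gap can be put into numbers. This back-of-the-envelope Python sketch compares the ratio of the largest to the smallest non-zero magnitude, in dB, for a 16-bit signed integer format and for IEEE single and double precision (using the smallest normal floating-point values). It gives roughly 90 dB for 16-bit fixed point against about 1529 dB for single and about 12318 dB for double precision; it is not a measure of filter performance, and `range_db` is a hypothetical helper.

```python
import math
import sys

def range_db(largest: float, smallest: float) -> float:
    """Ratio largest/smallest in dB, computed with logs so it cannot overflow."""
    return 20.0 * (math.log10(largest) - math.log10(smallest))

# 16-bit signed integer: largest magnitude 2**15, smallest non-zero magnitude 1
fixed16_db = range_db(2.0 ** 15, 1.0)
# IEEE single precision: largest finite value vs smallest positive normal value
single_db = range_db((2 - 2.0 ** -23) * 2.0 ** 127, 2.0 ** -126)
# IEEE double precision: limits reported by the Python interpreter
double_db = range_db(sys.float_info.max, sys.float_info.min)

print(f"16-bit fixed point : {fixed16_db:9.1f} dB")
print(f"32-bit floating    : {single_db:9.1f} dB")
print(f"64-bit floating    : {double_db:9.1f} dB")
```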

Each time a DSP generates a new number via a mathematical calculation, that number must be rounded to the nearest value that can be stored. Rounding and/or truncating numbers during signal processing naturally leads to quantization error, or ‘noise’. Since the gaps between adjacent representable numbers can be much larger with fixed-point than with floating-point processing, round-off error can be much more pronounced.
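The sketch below illustrates the point about gaps between adjacent numbers, assuming a 16-bit Q15 fixed-point format and IEEE single precision. The Q15 step is a constant 2^-15 across its whole range, whereas the float32 gap shrinks with the magnitude of the number, so rounding a small value such as 0.1 or 0.001 loses far less in floating point. The helper names are hypothetical.

```python
import struct

Q15_STEP = 2.0 ** -15  # constant gap between adjacent Q15 values

def round_to_q15(x: float) -> float:
    """Round x to the nearest multiple of 2**-15 (no saturation, assumes |x| < 1)."""
    return round(x / Q15_STEP) * Q15_STEP

def round_to_float32(x: float) -> float:
    """Round x to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def float32_gap(x: float) -> float:
    """Gap from float32(x) to the next larger float32 (assumes x positive and normal)."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    (next_up,) = struct.unpack("<f", struct.pack("<I", bits + 1))
    return next_up - round_to_float32(x)

for x in (0.1, 0.001):
    print(f"x={x}: Q15 gap={Q15_STEP:.3e}, float32 gap={float32_gap(x):.3e}, "
          f"Q15 error={abs(round_to_q15(x) - x):.3e}, "
          f"float32 error={abs(round_to_float32(x) - x):.3e}")
```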

It is generally easier to develop algorithms for floating-point DSPs, as fixed-point algorithms require more manipulation (such as careful scaling) to compensate for quantization noise. Designers typically choose floating-point DSPs when implementing complex algorithms, while fixed-point filters are commonly used in DSPs where data storage and power consumption are key limiting factors.

Given the constraints we specify, the Filter Builder app in the DSP System Toolbox, together with the Fixed-Point Designer toolbox, allows us to design efficient fixed-point filters.

The filter can first be designed for floating-point (single/double precision) input to obtain a baseline, which can then be used for comparison with the fixed-point filter.

The filter for this example is a lowpass equiripple FIR filter with the following specification:

- Sample rate: 2 kHz

- Centre frequency: 650 Hz

- Transition width: 100 Hz

- Equiripple design

- Maximum 1 dB of ripple in the passband

- Minimum 80 dB of attenuation in the stopband

For a centre frequency of 650 Hz and a transition width of 100 Hz, the band edges sit half the transition width (50 Hz) either side of the centre frequency:

- Passband frequency: 600 Hz

- Stopband frequency: 700 Hz
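The band-edge arithmetic, and the values typed into Filter Builder, can be sketched in a few lines of Python. The helper `band_edges` is hypothetical; the kHz values match the Frequency specifications entered below, and normalizing to the Nyquist frequency (fs/2) is a common convention in filter design functions.

```python
def band_edges(centre_hz: float, transition_hz: float) -> tuple[float, float]:
    """Passband and stopband edges placed symmetrically around the centre frequency."""
    half = transition_hz / 2
    return centre_hz - half, centre_hz + half

fs = 2000.0
fpass, fstop = band_edges(650.0, 100.0)
print(fpass, fstop)                         # 600.0 700.0
print(fpass / 1000, fstop / 1000)           # 0.6 0.7  (Filter Builder entries in kHz)
print(fpass / (fs / 2), fstop / (fs / 2))   # 0.6 0.7  (normalized to Nyquist, fs/2)
```

Calling `band_edges(650.0, 200.0)` gives `(550.0, 750.0)`, the 0.55/0.75 kHz values used when the transition width is doubled.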

The Filter Builder app is only installed with the DSP System Toolbox. When the DSP System Toolbox is installed for MATLAB Home edition, it also automatically installs the Signal Processing Toolbox and the Filter Designer app.

To start designing the filter with the Filter Builder app, click ‘Filter Builder’ under the APPS tab and then select ‘Lowpass’.

The filter is initially designed with a floating-point double-precision data type:

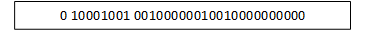

Floating-point double precision (64-bit) data type

Within the 'Main' menu:

Save the variable as: LPF_Double_Precision

Filter specifications:

- Impulse response: FIR

- Order mode: Minimum

- Filter type: Single-rate

Frequency specifications:

- Frequency units: kHz

- Input sample rate: 2

- Passband frequency: 0.6

- Stopband frequency: 0.7

Magnitude specifications:

- Magnitude units: dB

- Passband ripple: 1

- Stopband attenuation: 80

Algorithm:

- Design method: Equiripple

- Design options: the default values can be left here

Filter implementation:

- Structure: Direct-Form FIR

Within the Data types menu:

- Arithmetic: Double precision

Select the Apply button to apply the updated Main and Data Types settings before visualizing the design, then select View Filter Response.

This automatically displays the Magnitude Response (dB), because that option is already ticked by default under Analysis. Other filter characteristics can also be viewed manually under Analysis, such as:

- Phase Response

- Magnitude and Phase Responses (together)

- Impulse Response

- Pole-Zero plot

- Filter Coefficients

- Filter Information

- etc

Filter Information displays items such as:

- Filter structure

- Filter Length

- Stable or not

- Linear Phase or not

- Design Algorithm

- Design Options

- Design Specifications

- Measurements

- Implementation Cost

The Measurements information shows whether the design meets the specification requirements. The Filter Structure section displays the filter structure (e.g. Direct-Form FIR), the filter length, and the stability and linear-phase status. The Implementation Cost section displays the number of multipliers, adders and states used, plus the multiplications and additions per input sample (i.e. it estimates the computational complexity).

In this example, the Measurements, Filter Structure and Implementation Cost results of this equiripple lowpass FIR filter were:

Measurements:

- Sample Rate: 2 kHz => OK

- Passband Edge: 600 Hz => OK

- 3-dB point: 616.3011 Hz

- 6-dB point: 628.1421 Hz

- Stopband Edge: 700 Hz => OK

- Passband Ripple: 0.96726 dB < 1 dB => OK

- Stopband Attenuation: 80.0905 dB > 80 dB => OK

- Transition Width: 100 Hz => OK

Filter structure and its properties:

- Filter Structure: Direct-Form FIR

- Filter Length: 51

- Stable: Yes

- Linear Phase: Yes (Type 1)
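The ‘Type 1’ label follows from the coefficients: a linear-phase FIR filter has symmetric or antisymmetric coefficients, and its type depends on that symmetry and on whether the length is odd or even. A minimal Python sketch of the classification follows; the function name and tolerance are hypothetical, and it is applied to toy coefficient lists because the actual 51 coefficients are not reproduced here.

```python
def linear_phase_type(h, tol=1e-12):
    """Return 1-4 for a linear-phase FIR type, or None if h has no (anti)symmetry."""
    n = len(h)
    symmetric = all(abs(h[k] - h[n - 1 - k]) <= tol for k in range(n))
    antisymmetric = all(abs(h[k] + h[n - 1 - k]) <= tol for k in range(n))
    odd_length = n % 2 == 1
    if symmetric:
        return 1 if odd_length else 2
    if antisymmetric:
        return 3 if odd_length else 4
    return None

print(linear_phase_type([0.1, 0.3, 0.5, 0.3, 0.1]))  # 1: symmetric, odd length (like the 51-tap design)
print(linear_phase_type([0.2, 0.4, 0.4, 0.2]))       # 2: symmetric, even length
print(linear_phase_type([0.5, 0.0, -0.5]))           # 3: antisymmetric, odd length
print(linear_phase_type([0.5, -0.5]))                # 4: antisymmetric, even length
```

A symmetric 51-tap equiripple lowpass therefore lands in Type 1, which has no forced zero at 0 Hz or at the Nyquist frequency.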

Implementation Cost:

- Number of Multipliers: 51

- Number of Adders: 50

- Number of States: 50

- Multiplications per Input Sample: 51

- Additions per Input Sample: 50
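These counts match a plain direct-form FIR structure: an N-tap filter needs N multiplications, N-1 additions and N-1 delay states for every input sample. The minimal Python sketch below counts the operations as it filters. The class name is hypothetical, it uses plain floating-point arithmetic rather than modelling Filter Builder's implementation, and, like the reported costs, it does not exploit the coefficient symmetry of a linear-phase filter.

```python
class DirectFormFIR:
    """Minimal direct-form FIR that also counts arithmetic operations."""

    def __init__(self, coeffs):
        self.h = list(coeffs)
        self.states = [0.0] * (len(self.h) - 1)  # N-1 delayed input samples
        self.mults = 0
        self.adds = 0

    def step(self, x: float) -> float:
        taps = [x] + self.states                            # x[n], x[n-1], ..., x[n-N+1]
        products = [hk * xk for hk, xk in zip(self.h, taps)]
        self.mults += len(products)                         # N multiplications
        y = products[0]
        for p in products[1:]:                              # N-1 additions
            y += p
            self.adds += 1
        self.states = taps[:-1]                             # shift the delay line
        return y

fir = DirectFormFIR([0.25, 0.5, 0.25])
print([fir.step(x) for x in (1.0, 0.0, 0.0, 0.0)])   # impulse response: [0.25, 0.5, 0.25, 0.0]
print(fir.mults, fir.adds, len(fir.states))          # 12 8 2 after 4 samples

# A 51-tap filter: 51 multiplications, 50 additions and 50 states per sample
fir51 = DirectFormFIR([0.0] * 51)
fir51.step(1.0)
print(fir51.mults, fir51.adds, len(fir51.states))    # 51 50 50
```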

Once satisfied with the filter results, MATLAB code (getFilter.m) can be generated from the filter specifications within the Code Generation menu, by selecting the ‘Generate MATLAB code…’ option and leaving the default ‘Generate function that returns your filter as an output’ option ticked.

The results above can also be queried programmatically by manually adding calls to the measure, info and cost functions in the generated getFilter.m code. The fvtool function can then be added to visualize the resulting design.

Here is the generated MATLAB code in a .pdf file that illustrates the above:

https://usercontent.one/wp/www.kevnugent.com/wp-content/uploads/2020/10/FIR_LPF_Floating_Double_Precision.pdf?media=1712708755

Floating-point single precision (32-bit) data type

The same steps were followed as above, apart from the following:

Within the 'Main' menu:

Save the variable as: LPF_Single_Precision

Within the Data types menu:

- Arithmetic: Single precision

In this example, the Measurements, Filter Structure and Implementation Cost results were identical, and there was little difference in magnitude response between single and double precision.

Here is the generated MATLAB code in a .pdf file that illustrates the above:

https://usercontent.one/wp/www.kevnugent.com/wp-content/uploads/2020/10/FIR_LPF_Floating_Single_Precision.pdf?media=1712708755

Fixed-point full precision data type (initial default settings)

Note: the Fixed-Point Designer toolbox is required for the ‘Fixed point’ option to be visible under the Data types Arithmetic menu.

Within the 'Main' menu, leave the same settings as above apart from:

Save the variable as: LPF_Fixed_Point

Within the Data types menu:

- Arithmetic: Fixed point

Fixed point data types (leave all default values here):

- Input Word Length: 16 (default value)

- Input Frac Length: 15 (default value)

- Coefficient Word Length: 16 (default value)

- Filter Internals: Full Precision (default value)
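‘Full Precision’ internals mean no bits are discarded inside the filter, which is consistent with the Filter Information reporting no rounding and no overflow. A common worst-case bookkeeping rule for a direct-form FIR is sketched below in Python: a product needs the sum of the input and coefficient word lengths, and accumulating N products can grow the result by up to ceil(log2(N)) bits. The helper is hypothetical, the 15-bit coefficient fraction length is an assumption (Filter Builder picks it from the actual coefficients), and the word lengths Filter Builder actually reports may differ from this generic estimate.

```python
import math

def full_precision_lengths(input_wl, input_fl, coeff_wl, coeff_fl, num_taps):
    """Worst-case (word length, fraction length) for products and the accumulator."""
    product = (input_wl + coeff_wl, input_fl + coeff_fl)  # exact product of two signed values
    guard_bits = math.ceil(math.log2(num_taps))          # growth from summing num_taps products
    accumulator = (product[0] + guard_bits, product[1])
    return {"product": product, "accumulator": accumulator}

# Input 16/15 (Filter Builder defaults), coefficients 16 bits with an ASSUMED
# 15-bit fraction length, and 51 taps as in this example.
print(full_precision_lengths(16, 15, 16, 15, 51))
# {'product': (32, 30), 'accumulator': (38, 30)}
```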

Select the Apply button to apply the updated Main and Data Types settings before visualizing the design, then select View Filter Response.

In this example, the Measurements, Filter Structure and Implementation Cost results of this equiripple lowpass FIR filter were:

Measurements:

- Sample Rate: 2 kHz => OK

- Passband Edge: 600 Hz => OK

- 3-dB point: 616.304 Hz

- 6-dB point: 628.144 Hz

- Stopband Edge: 700 Hz => OK

- Passband Ripple: 0.9685 dB < 1 dB => OK

- Stopband Attenuation: 72.576 dB < 80 dB => Not OK

- Transition Width: 100 Hz => OK

Filter structure and its properties:

- Filter Structure: Direct-Form FIR

- Filter Length: 51

- Stable: Yes

- Linear Phase: Yes (Type 1)

- Arithmetic: fixed

- Filter Internals: Full Precision

- Round Mode: No rounding

- Overflow Mode: No overflow

- etc

Implementation Cost:

- Number of Multipliers: 51

- Number of Adders: 50

- Number of States: 50

- Multiplications per Input Sample: 51

- Additions per Input Sample: 50

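This design therefore fails the 80 dB stopband attenuation requirement, even though the passband ripple is still within specification; the loss of attenuation comes from quantizing the coefficients to 16 bits.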

Here is the generated MATLAB code in a .pdf file that illustrates the above:

https://usercontent.one/wp/www.kevnugent.com/wp-content/uploads/2020/10/FIR_LPF_Fixed_Point_original.pdf?media=1712708755

Fixed-point full precision data type (increasing the transition width from 100 Hz -> 200 Hz)

If we want a high minimum stopband attenuation without compromising on the number of coefficient bits, we must relax the other filter design constraint: the transition width.

Increasing only the transition width, from 100 Hz to 200 Hz, within the Main menu as follows:

- Passband frequency: 0.55 (Frequency units: kHz)

- Stopband frequency: 0.75

while keeping the same 16-bit coefficient word length gave a slightly higher stopband attenuation of 73.0869 dB. However, this is still below 80 dB, i.e. the design still misses the stopband attenuation requirement.

For FIR filters in general, each bit of coefficient word length provides approximately 4.5 to 5 dB of stopband attenuation. However, in this example it proved almost impossible to achieve more than about 4.5 dB per bit of coefficient word length, even after relaxing the transition width (as illustrated above).
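The per-bit figures can be checked with simple arithmetic on the results reported above: divide each achieved stopband attenuation by the 16-bit coefficient word length, and invert the 4.5 to 5 dB rule of thumb to estimate how many coefficient bits an 80 dB target would need. This is a rough Python sketch with hypothetical helper names, not a design rule from the toolbox.

```python
import math

def db_per_bit(atten_db: float, coeff_bits: int) -> float:
    """Achieved stopband attenuation per bit of coefficient word length."""
    return atten_db / coeff_bits

def bits_needed(target_db: float, db_per_bit_estimate: float) -> int:
    """Coefficient bits suggested by a dB-per-bit rule of thumb."""
    return math.ceil(target_db / db_per_bit_estimate)

for label, atten in (("100 Hz transition", 72.576), ("200 Hz transition", 73.0869)):
    print(f"{label}: {db_per_bit(atten, 16):.2f} dB per bit")
# 100 Hz transition: 4.54 dB per bit
# 200 Hz transition: 4.57 dB per bit

print(bits_needed(80, 5.0), bits_needed(80, 4.5))   # 16 18
```

The rule of thumb points to roughly 16 to 18 bits for 80 dB. Because the minimum-order baseline only just clears 80 dB (80.0905 dB), it leaves almost no margin for coefficient quantization, so in practice more bits may be needed.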

The filter length in this instance also decreased from 51 to 27, so fewer taps are required to implement this new FIR filter.
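The drop from 51 to 27 taps is what standard FIR order estimates predict: the required order is roughly inversely proportional to the transition width. As an illustration, the Python sketch below uses Kaiser's empirical order formula, a swapped-in estimator that is not necessarily what Filter Builder uses for its minimum-order design, with the 1 dB ripple and 80 dB attenuation specification above. It estimates an order of about 54 (length 55) for the 100 Hz transition width and about 27 (length 28) for 200 Hz, close to the reported lengths of 51 and 27. The function names are hypothetical.

```python
import math

def passband_deviation(ripple_db: float) -> float:
    """Peak-to-peak passband ripple in dB -> linear deviation delta_p."""
    g = 10 ** (ripple_db / 20)
    return (g - 1) / (g + 1)

def stopband_deviation(atten_db: float) -> float:
    """Minimum stopband attenuation in dB -> linear deviation delta_s."""
    return 10 ** (-atten_db / 20)

def kaiser_order_estimate(fpass, fstop, fs, ripple_db, atten_db) -> int:
    """Kaiser's empirical FIR order estimate (an approximation, not Filter Builder's method)."""
    dp = passband_deviation(ripple_db)
    ds = stopband_deviation(atten_db)
    delta_f = (fstop - fpass) / fs  # transition width as a fraction of the sample rate
    return math.ceil((-20 * math.log10(math.sqrt(dp * ds)) - 13) / (14.6 * delta_f))

for fpass, fstop in ((600, 700), (550, 750)):
    order = kaiser_order_estimate(fpass, fstop, 2000, 1.0, 80.0)
    print(f"transition {fstop - fpass} Hz: order ~{order}, length ~{order + 1}")
```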

Here is the generated MATLAB code in a .pdf file that illustrates the above:

https://usercontent.one/wp/www.kevnugent.com/wp-content/uploads/2020/10/FIR_LPF_Fixed_Point_incr_transition_width.pdf?media=1712708755

Fixed-point full precision data type (increasing the coefficient word length from 16 bits -> 24 bits)

If the coefficient word length is flexible, we keep the original frequency settings within the Main menu, i.e.:

- Passband frequency: 0.6 (Frequency units: kHz)

- Stopband frequency: 0.7

and, within the Data Types menu, increase the coefficient word length from 16 bits to 24 bits.

Increasing the number of bits allowed for the coefficient word length makes the quantization error smaller and lets the design meet the 80 dB stopband attenuation requirement. With a coefficient word length of 24 bits, a stopband attenuation of 80.0904 dB was achieved, as shown in the modified, regenerated MATLAB code in the following .pdf file:

https://usercontent.one/wp/www.kevnugent.com/wp-content/uploads/2020/10/FIR_LPF_Fixed_Point_incr_coefficient_word_length.pdf?media=1712708755
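Why extra coefficient bits help can be sketched in Python: rounding each coefficient to a fraction length F leaves an error of at most 2^-(F+1) per tap, so the error filter's gain is bounded by N times that. The helpers below are hypothetical; the ‘best precision’ fraction-length rule and the coefficient values are assumptions for illustration, not the real 51-tap design.

```python
import math

def best_precision_frac_length(coeffs, word_length):
    """Largest fraction length whose signed range still covers max|h| (hypothetical helper)."""
    largest = max(abs(c) for c in coeffs)
    integer_bits = math.floor(math.log2(largest)) + 1  # bits left of the binary point, excluding sign
    return word_length - 1 - integer_bits

def quantize(coeffs, word_length):
    """Round coefficients to word_length-bit signed fixed point with best-precision scaling."""
    frac = best_precision_frac_length(coeffs, word_length)
    lo, hi = -(1 << (word_length - 1)), (1 << (word_length - 1)) - 1
    scale = 2.0 ** frac
    return [max(lo, min(hi, round(c * scale))) / scale for c in coeffs], frac

def worst_case_error_db(num_taps, frac_length):
    """Bound on the error filter's gain: N coefficients, each off by at most 2**-(F+1)."""
    return 20 * math.log10(num_taps * 2.0 ** -(frac_length + 1))

h = [0.65, 0.3, -0.1, 0.01234]  # hypothetical coefficients, not the real design
for wl in (16, 24):
    hq, frac = quantize(h, wl)
    max_err = max(abs(a - b) for a, b in zip(hq, h))
    print(f"{wl}-bit: frac length {frac}, max error {max_err:.2e}, "
          f"51-tap worst-case error gain {worst_case_error_db(51, frac):.1f} dB")
```

With 51 taps the bound is about -62 dB for a 15-bit fraction and about -110 dB for a 23-bit fraction. The bound is pessimistic (the 16-bit design actually reached 72.576 dB), but at 24 bits it sits far below the -80 dB stopband level, so coefficient quantization no longer limits the design.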

It's worth mentioning that in many fixed-point design applications the coefficient word length is not flexible. For example, if we are restricted to 14 bits, the requested minimum stopband attenuation of 80 dB cannot be reached: the Filter Information Measurements show that a filter with 14-bit coefficient quantization achieves a stopband attenuation of only 62.7048 dB:

Measurements:

- Sample Rate: 2 kHz => OK

- Passband Edge: 600 Hz => OK

- 3-dB point: 616.3202 Hz

- 6-dB point: 628.1535 Hz

- Stopband Edge: 700 Hz => OK

- Passband Ripple: 0.97369 dB < 1 dB => OK

- Stopband Attenuation: 62.7048 dB < 80 dB => Not OK

- Transition Width: 100 Hz => OK

Here is the generated MATLAB code in a .pdf file that illustrates the above:

https://usercontent.one/wp/www.kevnugent.com/wp-content/uploads/2020/10/FIR_LPF_Fixed_Point_decr_coefficient_word_length.pdf?media=1712708755
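The pass/fail reading of the Measurements can be scripted once the numbers are available. This minimal Python sketch only checks the two magnitude requirements against the values reported in this example; it does not compute the measurements itself (in MATLAB that is the job of the measure function mentioned earlier), and the class and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class LowpassSpec:
    max_passband_ripple_db: float = 1.0
    min_stopband_atten_db: float = 80.0

def meets_spec(spec, passband_ripple_db, stopband_atten_db):
    """Return pass/fail for each magnitude requirement."""
    return {
        "passband ripple": passband_ripple_db <= spec.max_passband_ripple_db,
        "stopband attenuation": stopband_atten_db >= spec.min_stopband_atten_db,
    }

# (passband ripple dB, stopband attenuation dB) as reported by Filter Builder above
reported = {
    "double precision": (0.96726, 80.0905),
    "fixed point, 16-bit coefficients": (0.9685, 72.576),
    "fixed point, 14-bit coefficients": (0.97369, 62.7048),
}

spec = LowpassSpec()
for name, (ripple, atten) in reported.items():
    print(name, meets_spec(spec, ripple, atten))
```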